Lawsuits try to hold AI companies accountable for defamatory content

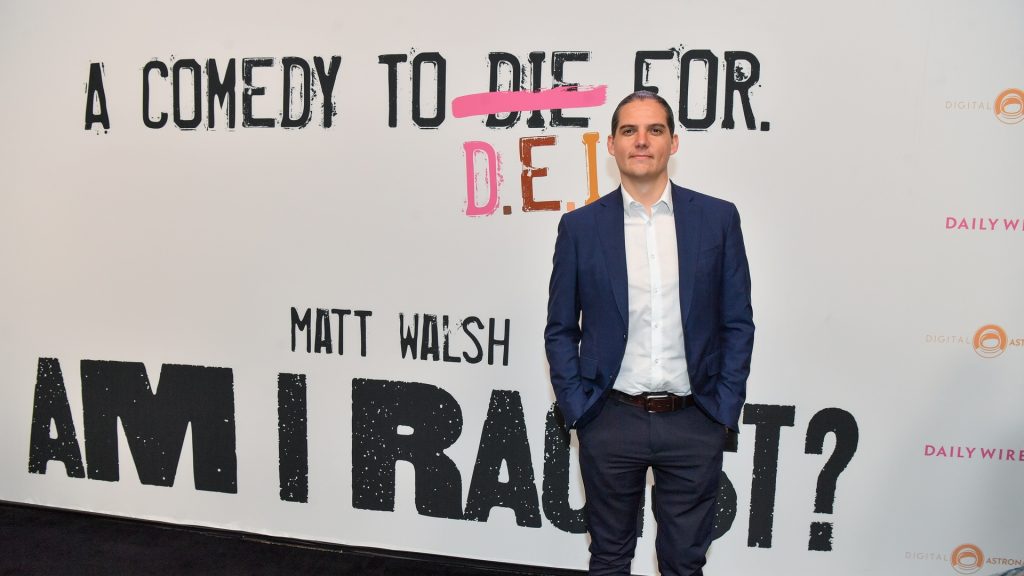

Those were his Hollywood years, and Robby Starbuck was driving Lamborghinis and directing music videos for Snoop Dogg and the Smashing Pumpkins. But this wouldn’t last forever, so Starbuck switched gears.

He soon emerged as a conservative social media influencer, urging corporate America to drop policies such as diversity, equity and inclusion. On Oct. 22, Starbuck, now 36, filed his second defamation suit against a major tech company, adding to the growing number of civil cases brought against artificial intelligence companies.

“Today, I’m filing a massive lawsuit against Google, not just for me, but for every conservative they’ve endangered, censored, discriminated against and defamed,” Starbuck said on his podcast, the Robby Starbuck Show. “Since 2023, Google’s AI products have produced defamatory answers to people who ask questions about me.”

In his case, he said, inaccurate answers by Google’s AI led to “fake mainstream news stories” that claimed he had been accused of murder and rape. His lawsuit seeks $15 million in damages.

In a statement to Straight Arrow News, Google blamed the false statements about Starbuck on AI “hallucinations.”

“Most of these claims relate to hallucinations in Bard that we addressed in 2023,” a Google spokesperson said. “Hallucinations are a well known issue for all LLMs, which we disclose and work hard to minimize. But as everyone knows, if you’re creative enough, you can prompt a chatbot to say something misleading.”

Starbuck’s lawsuit — and another that he filed against Meta this year — highlight a new frontier in U.S. defamation law: Can AI platforms be held liable for publishing material that harms the reputation of individuals, whether public figures or private citizens? Or are the platforms mere conduits that have no responsibility to ensure the accuracy of the output of their AI programs?

And if they cannot be held accountable, can anyone?

“We are seeing early challenges around questions of defamation from AI chatbots,” Jennifer Huddleston, a senior fellow in technology policy at the Cato Institute, a libertarian-leaning think tank, told SAN. “Many of these issues will be decided drawing on existing First Amendment principles as well as existing laws around issues such as intellectual property or intermediary liability that have been considered in the internet era.”

Lawsuits against AI

So far, there is no clear early winner in the legal war over AI and defamation.

In 2023, Mark Walters, the host of nationally syndicated Armed America Radio, sued OpenAI after its ChatGPT chatbot generated a false claim that Walters had embezzled money from a gun rights organization. In May, a state court judge in Georgia ruled in favor of OpenAI, dismissing the case.

The judge said Walters could not prove that the chatbot had malice toward him, a significant hurdle for plaintiffs in First Amendment suits. Also, the chatbot alerts users that it sometimes makes mistakes, the court said in granting summary judgment.

In Starbuck’s case against Google, he says one of the company’s early AI tools, Bard, produced answers to a third party’s query saying he had sexually abused children.

Starbuck also said Bard linked him to the white nationalist Richard Spencer and made other slanderous accusations. He argues that, since some people implicitly trust AI on matters ranging from technology to medical questions to finances, the accusations harmed his reputation.

He made similar complaints in a lawsuit filed in April against Meta Platforms. He said the company’s AI program falsely reported that he participated in the riot at the U.S. Capitol on Jan. 6, 2021.

Meta and Starbuck settled out of court on undisclosed terms. However, as part of the agreement, Meta hired Starbuck as an adviser.

Section 230 of the Communications Decency Act

Lawsuits concerning AI and defamation raise larger legal questions about Section 230 of the Communications Decency Act. This provision of the 1996 federal law shields internet companies from lawsuits over content posted by users on their platforms. Courts are now being asked to decide whether Section 230 also applies to content created by generative AI.

Section 230 has played a significant role in the progress of technology over the past three decades. It helped drive the expansion of the internet and of free speech in what was then uncharted online territory.

“Section 230 was written back in 1996, so the law doesn’t clearly say whether generative AI platforms are shielded from liability for the information they create,” Nick Tiger, associate general counsel for Pearl, a platform that offers human professionals to verify AI content, told SAN.

“Today’s AI-generated content exists in a gray area in which it could be reasonably designated as both old information from other sources repeated or aggregated by a digital platform and newly developed content that carries the full liability of speech online,” Tiger said.

But Huddleston, of the Cato Institute, holds that Section 230 has been a crucial player in allowing the internet to expand free speech online.

“Many of the questions around AI and defamation will be similar to those in previous eras,” Huddleston told SAN. “However, AI may also raise novel questions, including who is the speaker when defamation occurs or when the injury actually occurs.”

The future

Whether AI platforms are liable for their output is likely to be the subject of more litigation.

“When thinking about Section 230 in the generative AI world, the key will be to go back to the first principles that Section 230 reflects,” Huddleston said. “Ideally, we want platforms to be able to develop with content moderation strategies that can support the needs of the community they want to serve.”

Huddleston offered an example: A platform for the Jewish community does not have to carry antisemitic content just because it offers a platform “more generally.”

“It also reflects the idea that the person responsible for the harm that may occur from defamation … is the person who said it, not the place it occurred,” Huddleston said. “Many proposed changes to Section 230 would risk undermining either of these principles.”

Section 230 could be amended to clarify whether AI platforms should be treated as content providers when they influence or control the content output.

“Once this designation is defined, there could be certain carveouts for high-risk areas such as legal or medical topics,” Tiger said. “This move would continue to protect the public by holding AI platforms responsible for responses that create damaging outcomes.”

“Until Congress clarifies Section 230, all of these cases exist in the dubious gray area between immunity and liability,” Tiger said. “Ultimately, courts — or Congress — will have the final say on determining where Section 230’s boundaries lie, and it cannot come soon enough.”

The post Lawsuits try to hold AI companies accountable for defamatory content appeared first on Straight Arrow News.